From AI prompts to policy puzzles, AAO’s futurists explored what happens when technology meets tenacity…and why human judgment still has the sharpest vision of all.

If ophthalmology had a crystal ball, it would probably come with built-in OCT and real-time AI analytics. Luckily, one symposium on Day 3 of the American Academy of Ophthalmology Annual Meeting 2025 (AAO 2025) did the next best thing—gathering some of the sharpest minds in the field to peek into the future of eye care.

From fairness in AI to international therapy red tape, the session offered a panoramic view of where innovation, policy and good ol’ human judgment collide.

Prompting progress

If you’ve ever wished your AI could write your advocacy plan and your grocery list, Dr. Michael Levitt (USA) might just make that dream come true. His presentation paired generative AI with design thinking, two buzzwords that, when combined correctly, could turn apathy into advocacy.

“Generative AI users start by typing a prompt. The AI response encodes training data. Here’s the problem: [if you] prompt AI for ways to motivate ophthalmologists for grassroots advocacy, you’ll get generic answers,” Dr. Levitt explained. “We’ll pair specialized AI prompt patterns with design thinking and create a problem-solving framework to spark more tailored ideas.”

He then walked the audience through a systematic design thinking process, AI-enhanced edition. The steps were familiar to anyone who’s ever scribbled ideas on sticky notes: empathize, define, ideate, iterate, evaluate, prototype and validate. But with AI in the mix, each stage got an extra boost of brainstorming power.


From this hybrid method emerged several clever advocacy concepts, the standout being the Resident Advocacy Learning Pods, a six-month pilot program featuring small resident groups led by Academy mentors.

After putting the AI-assisted concepts through several rounds of testing (and, presumably, a few cups of coffee), the team refined their idea even further. “The program should aim to shape physician identity around clinical care and advocacy to drive future behavior,” Dr. Levitt said. “A uniform curriculum delivers action-based modules of ready-to-use tools to streamline participation backed by mentor onboarding to ensure consistency and quality.”

In the end, Dr. Levitt summed up his philosophy with a line worthy of an AI-generated fortune cookie: “Stack those patterns with design thinking and you just might accelerate your capacity to innovate.” In other words, the revolution will not only be automated; it will be empathetically designed.

Fairness first

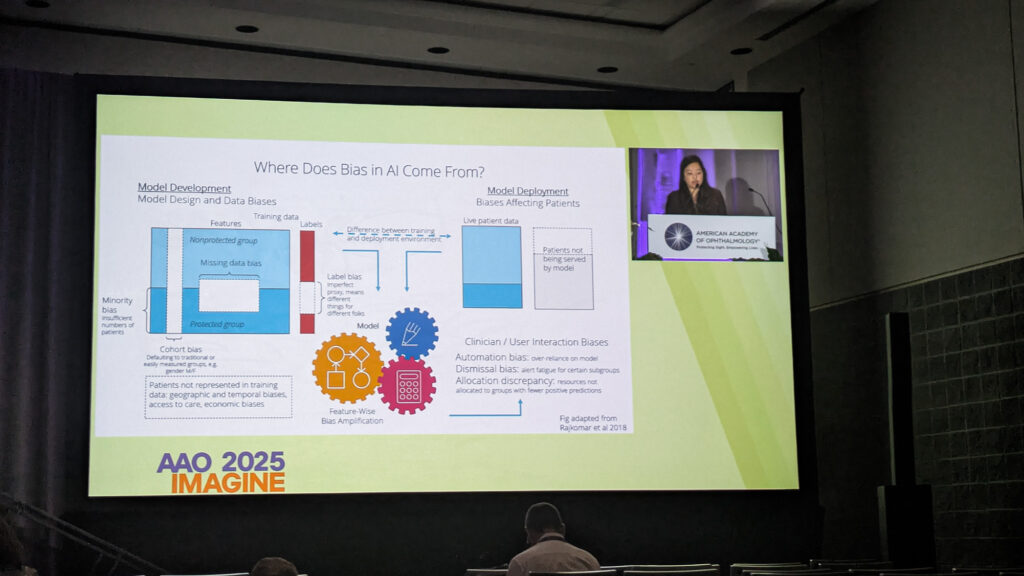

Artificial intelligence may be the shiny new toy in ophthalmology, but as Dr. Sophia Wang (USA) reminded everyone, it sometimes forgets to play fair.

“There’s a lot of hope that AI algorithms can be used to improve ophthalmic diagnosis or treatment. But you can imagine that if this kind of algorithm is biased or unfair, it could magnify or perpetuate the inequities in ophthalmic care, such that minorities end up receiving even worse care,” Dr. Wang explained.

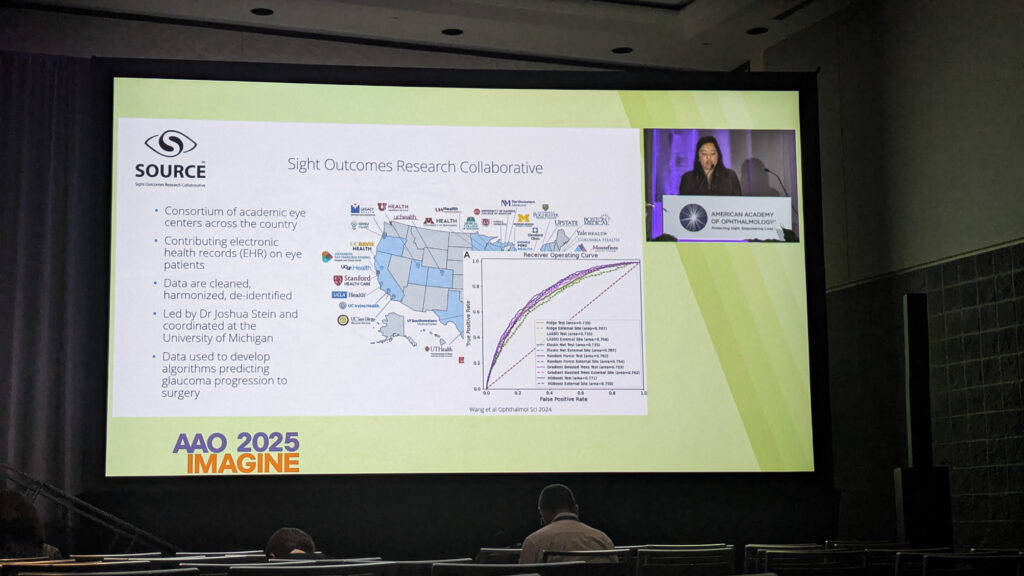

Her team decided to run the numbers on bias itself, testing algorithms that predict which glaucoma patients might progress to incisional surgery. The data came from the Sight Outcomes Research Collaborative (SOURCE), a national database pulling de-identified electronic health records from 23 academic eye centers across the United States.*

“We definitely found evidence of bias in our models. The performance was different across different subgroups of patients, but we had to look for it pretty carefully to appreciate it,” Dr. Wang revealed. “So I think that fairness evaluation for AI models is a must. And we as users have to demand these types of evaluations in order to feel that models can be trustworthy.”

Her results showed that fairness is no one-size-fits-all spectacle. “A fair approach for modeling in one site is not necessarily going to be fair somewhere else,” she noted, describing how model performance shifted across sites and patient subgroups. The lesson? When building AI, start with diversity in mind, because no amount of algorithmic eyewash can fix bias after the fact.


Across borders and bureaucracies

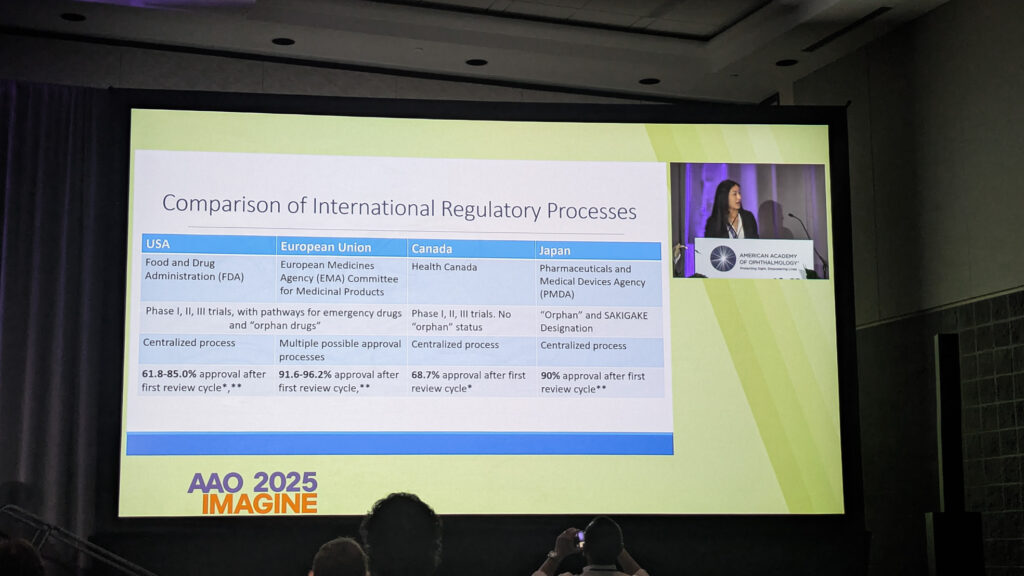

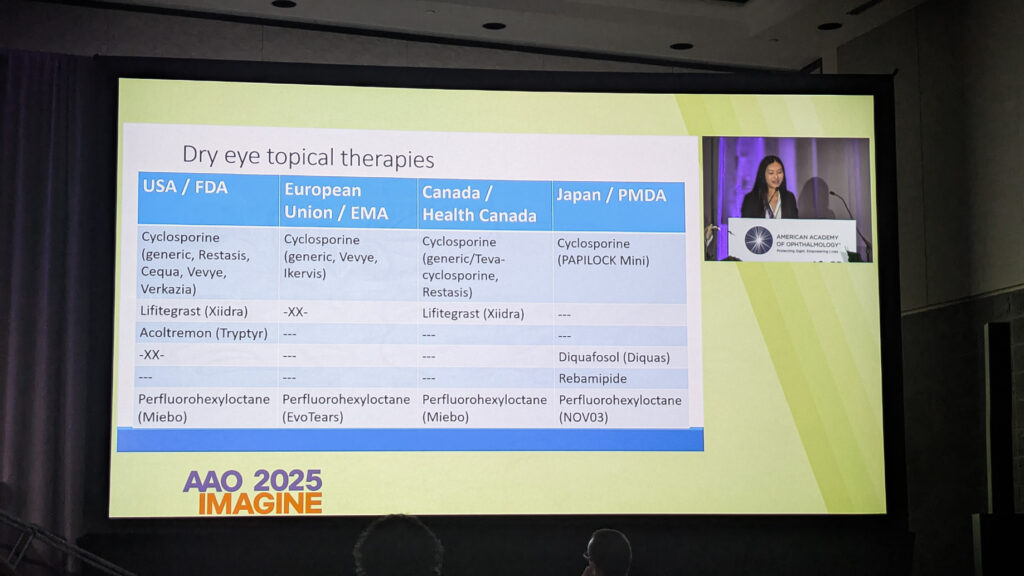

Then came Dr. Weijie Violet Lin (USA), ready to take everyone on a whistle-stop world tour of regulatory bodies—and why some up-and-coming treatments are still waiting for their passports.

She compared drug and device approval processes across nations to explain why certain treatments get the green light in one country and the red tape in another. “The median approval time for drug approval process is similar in the U.S. versus other developed countries,” Dr. Lin noted. However, “the first cycle approval rates do vary, and this may account for some differences in available therapies in different countries.”

Her case in point: dry eye therapies. While Japan’s market features mucin secretagogues, those same treatments haven’t migrated elsewhere, not because regulators rejected them but because they were never submitted for approval. Turns out, bureaucracy can sometimes be the biggest barrier to clear vision.

Who’s minding the machine

Just as the audience started wondering if AI might take over the world (or at least the clinic), Dr. David Aizuss (USA), ophthalmologist and board chair of the American Medical Association (AMA), stepped up to restore some balance.

“As powerful as AI is, it is not perfect,” Dr. Aizuss warned. “It can sometimes make mistakes, reflect biases hidden in its training data or be misused in ways that limit care instead of improving care, as we see with prior authorization.”

He reminded attendees that the AMA prefers the term “augmented intelligence,” a friendly nudge that the “I” in AI shouldn’t stand for “independent.”

“Technology has an important role to play in improving patient outcomes, but the human connections that are so important in ophthalmology, every physician specialty, are critically important,” he said.

Physician sentiment seems to be catching up to the tech. “A growing majority of physicians now recognize AI’s benefits, with 68% in 2024 reporting at least some advantage in patient care, up from 63% the prior year,” Dr. Aizuss noted. “Usage of AI nearly doubled to 66% from 38% the prior year in 2024.”

To ensure that AI serves patients, the AMA has outlined a detailed policy roadmap, including physician-led oversight boards, mandatory real-world testing and transparency about data sources. As Dr. Aizuss summed up, “Voluntary agreements or voluntary compliance by AI developers is not sufficient.” Translation: trust is earned, not coded.

The takeaway

If one theme united the session, it was that ophthalmology is standing at a crossroads where innovation meets integrity…and both need each other to see clearly.

As Dr. Wang put it best, “Fairness is more than just the mathematical definitions. We get to decide as a society what is fair and how we get to equity in ophthalmology and what role AI plays in the process.”

So, while algorithms may sharpen our diagnostics and policies may widen our reach, the soul of ophthalmology still lies in human vision.

*Wang SY, Ravindranath R, Stein JD; SOURCE Consortium. Prediction Models for Glaucoma in a Multicenter Electronic Health Records Consortium: The Sight Outcomes Research Collaborative. Ophthalmol Sci. 2023;4(3):100445.

Editor’s Note: The American Academy of Ophthalmology Annual Meeting 2025 (AAO 2025) is being held on 17-20 October 2025, in Orlando, Florida. Reporting for this story took place during the event. This content is intended exclusively for healthcare professionals. It is not intended for the general public. Products or therapies discussed may not be registered or approved in all jurisdictions, including Singapore.